Businesses today operate in a world where change is constant, choice is unlimited, and the customer is fickle. How do companies navigate uncertainty and waning loyalty?

By activating enterprise data to improve the customer experience, and building digitally agile services that quickly respond to change.

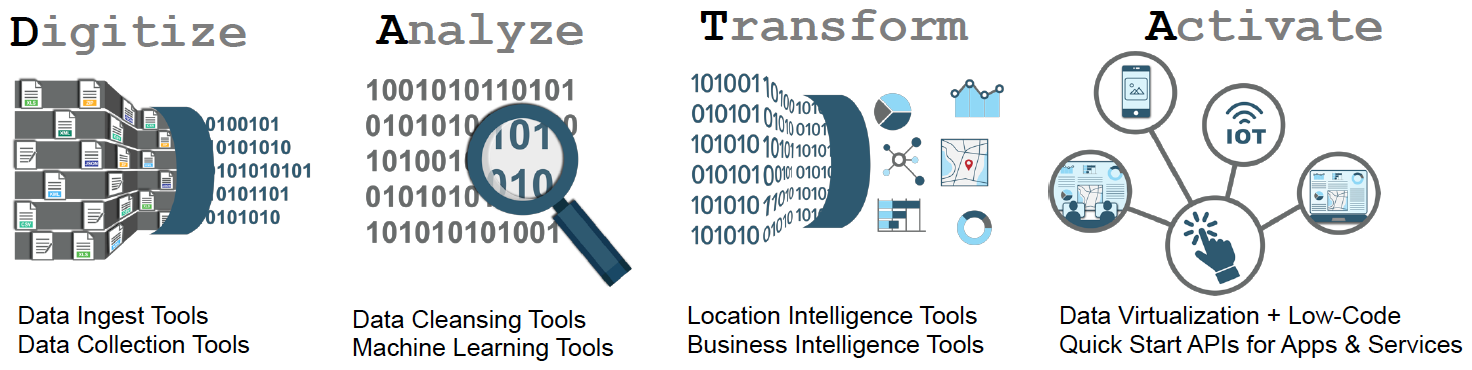

In our previous article, Digital Transformation Simplified, we discussed how enterprise data architecture is key to making data and algorithms truly democratic and self-serve, and to enabling digital transformations. We discussed the four phases of the digital transformation process: Digitize, Analyze, Transform, and Activate. In this article, we focus on the Activate phase and show how a Customer eXperience Platform (CXP) enables digitally agile services and new customer and business experiences using enterprise data as a service.

The Increasing Cost of Data

Information technology and automation, when done right, improve operational efficiencies and lower costs. But despite automation and technology-driven efficiencies, software development and IT services costs continue to rise.

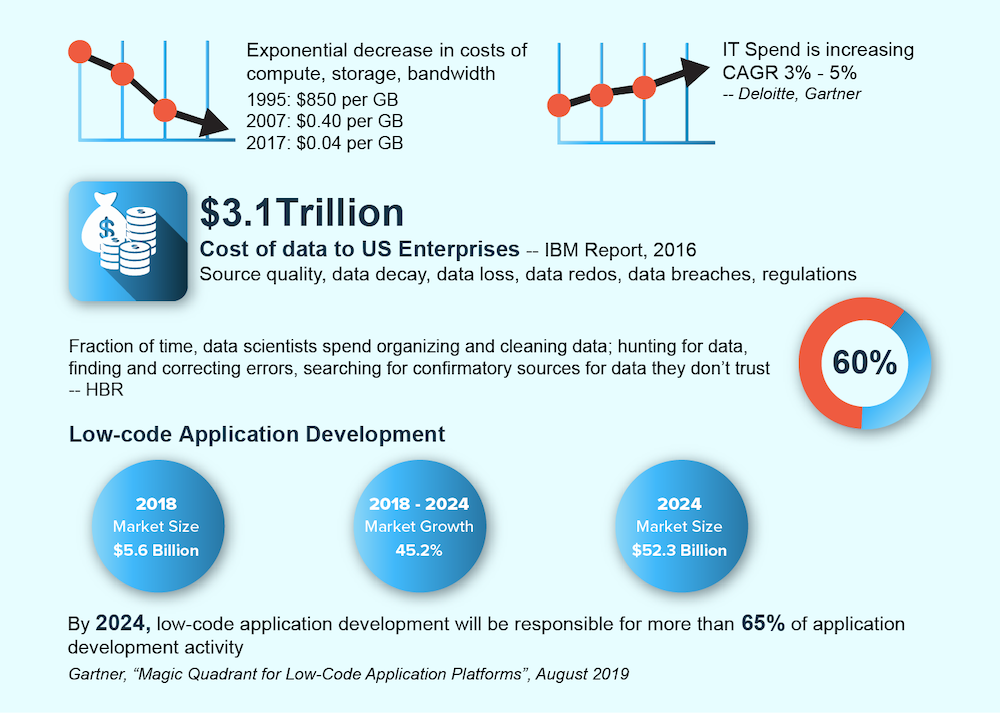

Over the years, the costs of compute, storage, and bandwidth have declined exponentially. Programming languages like Java, which virtualized hardware with a write once, run anywhere model, significantly brought down software development and porting costs. The move to the cloud and serverless computing has shortened time-to-market and reduced deployment and operational costs. However, despite declining hardware costs and improvements in programming technologies, enterprise IT spend is increasing year over year.

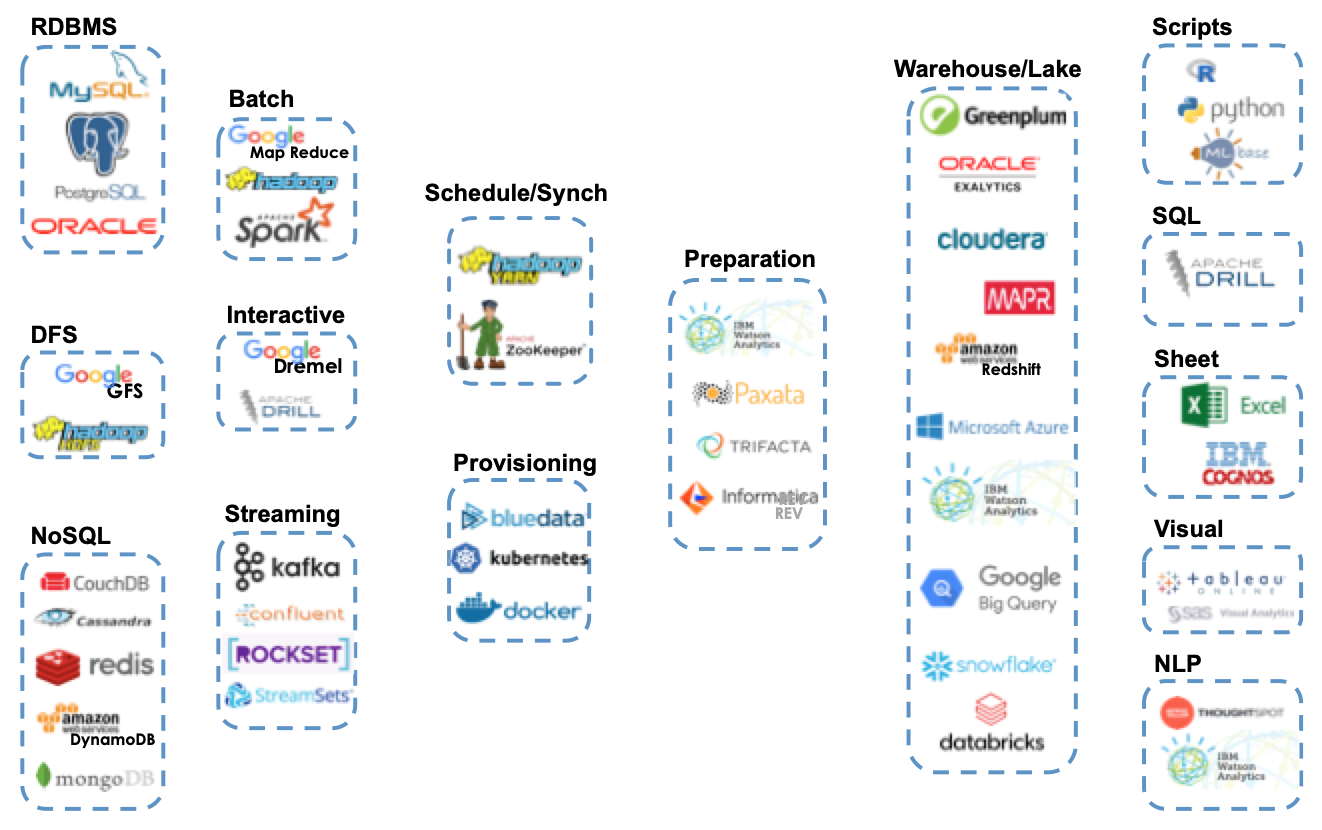

A significant factor in the increase in IT services and software development costs is managing and mastering data. While we have been able to virtualize compute, storage, and bandwidth, data is still not virtualized. The volume, variety, and velocity of data still determine the tools and platforms of choice — different platforms, skills, and personnel are required for managing small and big data, structured and unstructured data, streaming and non-streaming data, numerical and location data.

As anyone who has worked with business data knows, even with all kinds of tools to manage the volume, variety, and velocity of data, most of the time is actually spent on data quality and veracity. An IBM report estimates this cost to US enterprises at $3.1 trillion annually. It is the small things: misspelt names, incomplete addresses, telephone numbers with and without area codes, incorrect tagging, images that have to be resized or even redone, locations in UTM coordinates that have to be normalized to lat/longs, duplicates to identify, identities to disambiguate, and data bias to correct. And the farther downstream from its source the data is processed, the higher the cost of correcting and cleansing it.
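To make a few of these small things concrete, here is a minimal Python sketch that normalizes phone numbers and collapses duplicate records. The record fields (`name`, `phone`), the 10-digit US-style phone format, and the default area code are all assumptions chosen for illustration; real cleansing rules depend on the dataset and are usually far messier.

```python
import re
from typing import Dict, List, Optional


def normalize_phone(raw: str, default_area_code: str = "415") -> Optional[str]:
    """Return a 10-digit US-style number, or None if the input is unusable.

    The default area code is a hypothetical fallback for 7-digit numbers.
    """
    digits = re.sub(r"\D", "", raw)          # drop spaces, dashes, parentheses
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]                  # strip a leading country code
    if len(digits) == 7:
        digits = default_area_code + digits  # fill in the missing area code
    return digits if len(digits) == 10 else None


def cleanse(records: List[Dict[str, str]]) -> List[Dict[str, Optional[str]]]:
    """Normalize names and phones, keeping the first record per (name, phone)."""
    seen: Dict[tuple, Dict[str, Optional[str]]] = {}
    for rec in records:
        name = " ".join(rec["name"].split())  # collapse stray whitespace
        phone = normalize_phone(rec["phone"])
        key = (name.lower(), phone)           # case-insensitive duplicate key
        if key not in seen:
            seen[key] = {"name": name.title(), "phone": phone}
    return list(seen.values())


raw_records = [
    {"name": "  jane   DOE", "phone": "(415) 555-0100"},
    {"name": "Jane Doe", "phone": "555-0100"},        # same person, no area code
    {"name": "John Roe", "phone": "1-212-555-0199"},
]
cleaned = cleanse(raw_records)
for row in cleaned:
    print(row)
```

Even this toy version has to make judgment calls, such as which area code to assume and which duplicate to keep. That is why cleansing is cheaper at the source, where the data owner knows the answers.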

An industry response to the increasing software and services costs and the shortage of programmers with the right skills is the move to Low-Code. A Gartner report estimates that by 2024, 65% of all application development activity will involve low-code.

The Promise of Low-Code

Low-code platforms promise rapid application development at lower cost. Low-code replaces much hand-written code with visual programming: drag-and-drop widgets and built-in data connectors that enable designers and non-programmers to create data-driven applications. The term low-code is of recent origin, but rapid application development tools are not new. They have a history that goes back to Visual Basic, Oracle Forms, and many others. Container Managed Persistence tools allowed server-side programmers to store and retrieve data from a database without writing any SQL code.

Low-code tools from the past have clearly not stood the test of time. Even though applications could be quickly prototyped, the lack of options, functionality, and flexibility did not allow them to scale well.

More recently, however, low-code solutions have had success and lowered business costs in processes and workflows that are well understood. Designers and non-programmers routinely use Content Management Systems (CMS) to design and build websites. Designers can create interactive content with no-code tools and easily embed the software-generated code into websites. Quick app builders like Google AppSheet and Amazon Honeycode allow non-programmers to quickly prototype and automate simple business functions. Automating repeatable processes and data pipelines has reduced the coding required for custom apps in verticals like device automation, IT automation, and sales and customer data automation.

However, visual coding and data connector templates are not enough for more general business processes. Citizen developers also create another problem: as the number of apps within an organization grows, data becomes captive within those apps, which worsens the data silo problem. In addition, without proper data governance and oversight, these apps may introduce new security vulnerabilities that can be exploited.

Low-code does lower the barrier to coding. It allows executives, managers, interns, and other citizen developers to quickly churn out low-code apps that automate some business function. However, without proper enterprise data governance and without CDO office oversight, what you end up with is spaghetti software that will soon become IT’s nightmare.

Making good software is hard. Enterprise data, when managed properly, can be a core strategic asset, and an enterprise’s data architecture can be the key business differentiator in creating new business and customer experiences. The solution is not piecemeal automation and citizen developer apps, which end in more siloed and possibly insecure data. The solution is a reimagined enterprise data infrastructure with the right tools and platforms to ingest, manage, and activate data to create timely information and actionable insights that improve customer engagement and retention.

A-La-Carte Data Stack

Enterprise digital transformation is about creating value for customers and timely and actionable information and insights for the business. Every other day there are new customer data platforms being marketed that provide a unified view of data offering a 360-degree insight into the customer.

While marketers and managers talk of the business value of a unified view in terms of improving the customer experience, increasing customer engagement and retention, and getting close to the customer through one-to-one marketing, the engineering and IT services teams think of a unified view in terms of storage, provisioning, processing, cleansing, scheduling, and pipelines that bring curated data into a centralized repository that can be queried, analyzed, and visualized.

The data landscape in the figure below illustrates the complexity of building out a unified data view and analytics infrastructure. Each technology block manages one aspect of the complete solution: data storage, data processing, data provisioning, data scheduling, data cleansing, data warehouse, or data access. Some vendors may package several of these blocks into one offering.

Business data is typically a mix of small and big data, streaming and infrequently updated data, identity-based and location-based data, relational and non-relational data, and structured and unstructured data. In addition, this data spans departments and systems. Digital-native enterprises may use an RDBMS to provision assets and resources, a NoSQL service to cache and speed access to data, a streaming data solution to manage event logs, clickstream data, sensor data, and IoT feeds, and batch and interactive processing to create summarized data and dimensional rollups in a data warehouse for easy reporting and analytics.

Building out a general enterprise analytics infrastructure is complex, challenging, and costly. It also requires buy-in from the data owners, who may not see the business value in sharing the data and may lack the incentive to make sure that the data is good and current. A-la-carte data stacks tend to be engineering and IT services driven initiatives in which the data and context owners are usually far removed from the analysis.

Digital Transformation Platforms

Building out a data stack using best-of-breed components is complex and challenging. It requires expensive engineering and IT resources, and integrating the different components is a high-skill, time-consuming process. An alternative is to start with an off-the-shelf integrated suite of tools and services and build solutions around the selected platform.

The figure below shows the evolution of digital transformation platforms. ERP solutions automate common internal business workflows. ETL/EAI solutions focus on data integration and provide data warehouses that can be queried as needed for business operations. A Data Lake provides a centralized data repository as a service, with compute nodes that run ML and AI workloads and low-code analytics and visualizations that help improve business operations and decisions. RPA solutions provide low-code scripts that automate business functions and data pipelines.

Customer Data Platforms pull all customer information (transactional data, call-center interactions, first-party data from the company’s web and mobile channels, and social media interactions) into one centralized data repository. The repository provides customer insights and can be accessed to improve the customer experience and to enable personalized marketing, such as delivering a targeted ad to an individual consumer.

For many large enterprises, it is not a question of choosing the right platform. They may have use-cases and developed expertise in all of the above. Legacy ERP software usually coexists with ETL/EAI workloads, with engineers using Data Lakes for machine learning workloads, with marketing using a CDP, and RPA scripts tying different systems together and automating the data pipelines. Enterprises today don’t lack for information technology. What they lack is the ability to convert enterprise data into timely information and business value.

A CDP is typically owned and operated by marketing and sales but engineering and IT services have to be involved in bringing the data in and keeping it updated. A successfully deployed CDP segments customers into personas and optimizes marketing efforts by enabling personalized marketing to cohorts or individuals. To create digitally agile services, the CDP has to be connected directly to product, marketing, sales, and post-sales digital platforms. When the analytics platform is connected to the other digital platforms, data driven services allow for targeted and relevant offers, up-selling products and premium service offerings, and dynamic pricing, each of which can drive new revenue streams. Integrating these different platforms is complex and challenging and will again involve engineering and IT services.

Hawkai Data CXP (Customer eXperience Platform)

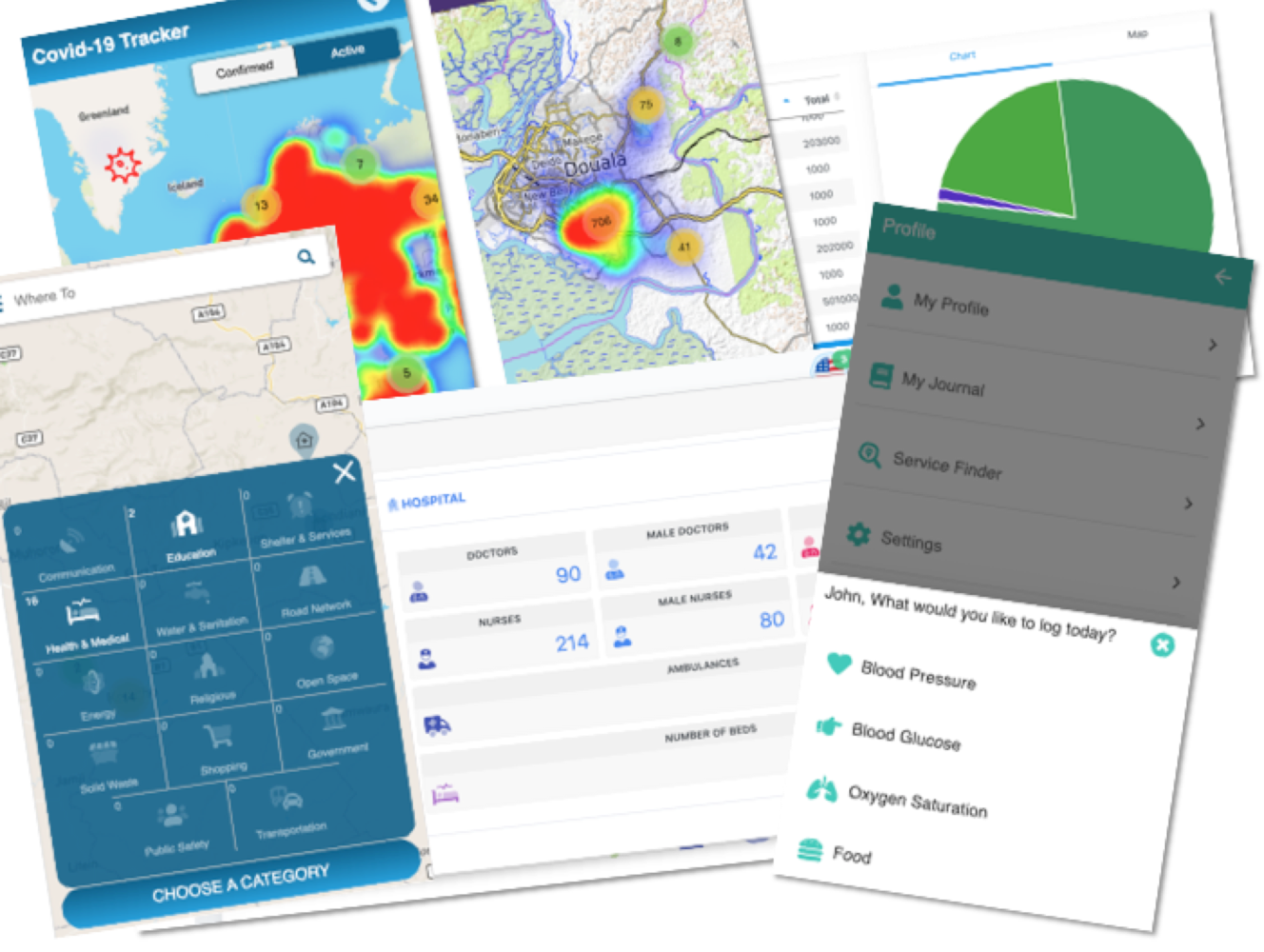

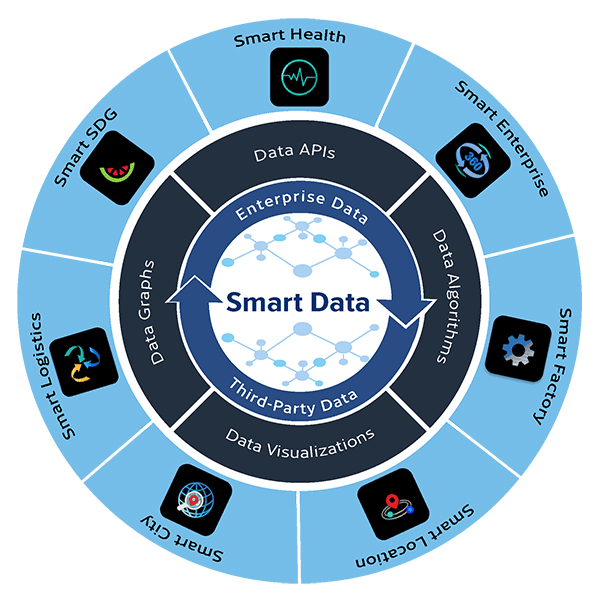

Hawkai Data CXP is not just an analytics infrastructure solution for the enterprise that democratizes data visualizations. It is a data virtualization platform that provides APIs to access enterprise and external datasets. Customer experiences can be quickly prototyped, piloted, and operationalized by scaling out the data.

Hawkai Data CXP provides a DATA (Digitize, Analyze, Transform, Activate) process and tools that an enterprise uses to enable digital transformation. The Digitize, Analyze, and Transform tools ensure that the data in the Activate phase is consistent, correct, complete, current, and actionable.

Activation is the process of building out applications and cloud services using the actionable data. Hawkai Data CXP simplifies the building of data-driven applications by providing uniform, fast access to enterprise data via its data APIs. Client-side programmers use the APIs to access data and micro-services to build out data-driven apps and services.

Pre-loaded with third-party datasets, pre-integrated into third-party micro-services, and packaged with visualization and data algorithm libraries, Hawkai Data CXP is a cloud-based data platform that makes enterprise data instantly actionable and accelerates an enterprise’s digital transformation journey by enabling new business and customer experiences.

Activated third-party data

Hawkai Data CXP provides direct access to third-party datasets and micro-services, including an IP-to-geo database, population and demographic databases, neighborhood amenity data, regional shapefiles, etc. The Location 360 service APIs provide data insights about any location: population characteristics, age and gender breakdowns, and demographics. Whether a business is deciding where to put a new store, an insurance company is looking for data on the general health characteristics of a suburb, or a government agency is tracking sustainable development goals at the local level, the Location 360 service APIs provide the base data on which other datasets can be merged and layered.
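The idea of layering a business dataset on top of location base data can be sketched in a few lines of Python. This is not the Location 360 API, whose details are not covered here. The `region_id` key, the field names, and the sample figures are hypothetical and exist only to illustrate the join.

```python
from typing import Dict, List


def layer_on_base(base: List[Dict], overlay: List[Dict],
                  key: str = "region_id") -> List[Dict]:
    """Left-join overlay rows onto base rows that share the same region key."""
    overlay_by_key = {row[key]: row for row in overlay}
    layered = []
    for row in base:
        extra = overlay_by_key.get(row[key], {})  # regions with no overlay stay as-is
        layered.append({**row, **extra})
    return layered


# Hypothetical base layer: population by region (illustrative numbers only).
base_layer = [
    {"region_id": "R1", "population": 52000},
    {"region_id": "R2", "population": 18000},
    {"region_id": "R3", "population": 30000},
]

# Hypothetical enterprise layer: existing store counts by region.
store_counts = [
    {"region_id": "R1", "stores": 4},
    {"region_id": "R2", "stores": 3},
]

layered = layer_on_base(base_layer, store_counts)
for row in layered:
    stores = row.get("stores", 0)
    # Stores per 10,000 residents: a simple signal for under-served regions.
    row["stores_per_10k"] = round(stores / row["population"] * 10_000, 2)
    print(row)
```

In this made-up data, region R3 has residents but no stores, which is the kind of gap a site-selection analysis would look for once the two layers share a common location key.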

No-code Visualizations and Algorithms

Hawkai Data CXP provides no-code customizable visualizations and configurable algorithms that accelerate the prototyping and piloting of new apps and services. Easy configurations and controls allow for customized visualizations and a standard way to access business and location data.

Customizable Views

Hawkai Data CXP provides customizable views at login. Depending on the organization, the user sees and manages the apps and services that are specific to that user and organization. Users can be configured hierarchically into groups within an organization, with data visibility limited by the level in the hierarchy. New apps and services can be enabled by user group or by organization.
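One way to picture hierarchy-limited visibility is the minimal Python sketch below. It assumes that a user sees records owned by their own group and by any group beneath it in the hierarchy. That rule, along with the `Group` class and the record layout, is an illustrative assumption and not a description of how Hawkai Data CXP implements access control.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Group:
    """A node in a hypothetical organization hierarchy."""
    name: str
    parent: Optional["Group"] = None


def can_view(viewer: Group, owner: Group) -> bool:
    """True if the owner group is the viewer group or sits beneath it."""
    node: Optional[Group] = owner
    while node is not None:
        if node is viewer:
            return True
        node = node.parent  # walk up toward the root of the hierarchy
    return False


def visible_records(viewer: Group, records: List[Dict]) -> List[Dict]:
    """Filter records down to those the viewer's group is allowed to see."""
    return [r for r in records if can_view(viewer, r["group"])]


# Hypothetical hierarchy: one organization with two regional groups.
org = Group("org")
west = Group("west", parent=org)
east = Group("east", parent=org)

records = [
    {"id": 1, "group": west},
    {"id": 2, "group": east},
    {"id": 3, "group": org},
]

print([r["id"] for r in visible_records(org, records)])   # organization-level view
print([r["id"] for r in visible_records(west, records)])  # regional view
```

Under this assumed rule, the organization-level group sees all three records while the west group sees only its own.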

Downstream Data Visibility

One way to make sure that the data owner has an incentive to provide good data is by making sure that the data owner has visibility into the data provided and its downstream usage. For example, when a business adds a new storefront or dealership, a digitally agile service will show the data in the online store finder. When a data owner has immediate visibility into the updated data, they will take ownership of the data and make sure that the data is good and current.

Agile Apps and Services

Customer and business experiences can be quickly prototyped using the data APIs. A JavaScript programmer has consistent APIs to access enterprise and third-party data, along with client-side libraries that make it quick and easy to create new apps and services. Once the customer experience is validated, the data can be scaled out and the service operationalized.

From Smart Data to Smart Services

Do you identify with any of the personas below?

- You are an enterprise executive who has deployed ERP, EAI, and CDPs but are still unsure whether your marketing spend is aligned with sales.

- You manage enterprise logistics but lack visibility into the last-mile of your supply chain.

- You are a city data officer with two different vendor systems for business and location data and struggle to keep data synchronized.

- You are a government official looking to measure and benchmark sustainable development goals at local and regional levels but are unsure about which metrics to track and which models to use.

- You are in healthcare and looking for ways to reduce healthcare costs and improve patient outcomes.

It is time to rethink your data architecture and let the data work for you instead of you having to work for the data. It is time to start with the customer experience and your desired outcomes and work your way back to the data. It is time to add value and velocity to your digital transformation initiatives by choosing the right platform, the right process, and the right people.

Image Attributions

- Header background image is from unsplash.com

- Cartoon by Sidney Harris, published in the New Yorker (05/26/2002)
