Creating Data-Driven Enterprises

Businesses are beginning to recognize data as a core strategic asset. Every enterprise today likes to think of itself as data-driven or is trying to reinvent itself to become data-driven. Many enterprises are hiring and building data science talent in-house to help with this digital transformation.

Successful data teams within an enterprise usually consist of software and database experts, experts in statistics and data visualization, machine learning domain experts, and business experts who can translate a business problem into a complementary data problem.

While the expertise required from a data science team is well understood, it is less clear how these teams should be structured and which tools and platforms they need to extract business value from business data. Should the team report to a Chief Data Officer who orchestrates data gathering and is responsible for delivering insights and business value from the collected data? Or should data teams be decentralized, spread across business functions, each providing the necessary data expertise within its own function?

Data moved away from its context rarely leads to insights, and data that remains within its business silo rarely provides a complete picture. To be of value, data must stay close to its context and be available as a self-serve resource, so that whoever needs it can access it seamlessly, across business functions, whenever they need it.

Understanding Enterprise Data

Enterprise-wide data platforms are generally an afterthought. Businesses deploy enterprise apps to automate business functions. On average, a medium-sized business may use more than 10 applications, and a large enterprise several hundred. The data gets siloed within these apps. Each application provides its own reporting and analytics, but in isolation these can at best deliver fragmented analytics and possibly misleading insights. Over time, data-driven decisions become harder and harder to make, as the challenge turns into one of integrating application data. In most businesses today, the approach to unlocking the value of business data is to de-silo it, transform and normalize it into one giant data warehouse, and then run analytics on that warehouse. Such standard data integration solutions from ETL, EAI, and data lake vendors, however, just end up creating yet another data silo, with the content and context owners far removed from the analysis.

Data Silos

Enterprises are organized as functional silos, each with its own departments, teams, people, applications, and data. By their very nature, these functional silos tend to isolate teams and people, with each team managing its own applications and data. Datasets remain separate within each silo, often duplicated and without any cross-references.

The figure below shows organizational silos that are common to many medium-sized and large enterprises. The figure is for illustrative purposes only, to show the diversity of apps, and is by no means meant to be representative or exhaustive. These silos don’t just isolate people and teams; they also keep data captive within each application, where it is not easily available in a way that could give the business the whole picture it needs to succeed.

It is generally assumed that data within a silo is already integrated and that the only problem is integrating data horizontally, across silos. This is not necessarily true. Many organizational silos use multiple applications, and the data within these applications is rarely integrated.

To take an example, consider the marketing department, which is responsible for running marketing campaigns. A marketer may split the campaign budget across email marketing, TV marketing, search, mobile and digital ad serving, and inbound content creation. Each marketing channel typically requires a separate application, so executing the campaign may involve applications as diverse as Mailchimp, Marketo, Google Ads, Meta, TradeDesk, etc. Each application produces its own analytics and reports, giving a fragmented picture of campaign performance. To get the full picture, the campaign data must be integrated across these applications and merged with conversion data from the website (Google Analytics, etc.). Data integration vendors make it possible to combine and analyze this data, but doing so requires custom solutions, and managing the combined data is complex, costly, and time-consuming.
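To make the integration step concrete, here is a minimal sketch of merging per-channel reports with website conversions. It assumes each application can export per-campaign rows keyed by a shared campaign ID and that conversions are already attributed to that ID (for example via UTM tags). All field names and figures are hypothetical and are not the actual export formats of the tools named above; in practice, getting consistent campaign IDs across applications is much of the custom work.

```python
from collections import defaultdict

# Hypothetical per-channel exports; each real application uses its own schema.
channel_reports = {
    "email": [{"campaign_id": "spring", "spend": 500.0, "clicks": 1200}],
    "search": [{"campaign_id": "spring", "spend": 2000.0, "clicks": 3400}],
    "social": [
        {"campaign_id": "spring", "spend": 1500.0, "clicks": 2100},
        {"campaign_id": "summer", "spend": 800.0, "clicks": 900},
    ],
}
# Hypothetical website conversions, keyed by the same campaign_id.
conversions = {"spring": 310, "summer": 45}


def combine_campaigns(reports, conversions):
    """Sum spend and clicks across channels, then attach website conversions."""
    totals = defaultdict(lambda: {"spend": 0.0, "clicks": 0, "channels": set()})
    for channel, rows in reports.items():
        for row in rows:
            t = totals[row["campaign_id"]]
            t["spend"] += row["spend"]
            t["clicks"] += row["clicks"]
            t["channels"].add(channel)

    combined = {}
    for cid, t in totals.items():
        conv = conversions.get(cid, 0)
        combined[cid] = {
            "spend": t["spend"],
            "clicks": t["clicks"],
            "channels": sorted(t["channels"]),
            "conversions": conv,
            # Cost per conversion; None when a campaign has no conversions.
            "cost_per_conversion": t["spend"] / conv if conv else None,
        }
    return combined


summary = combine_campaigns(channel_reports, conversions)
print(summary["spring"])
```

Even this toy version bakes in assumptions (a shared key, comparable metrics per channel) that real exports rarely satisfy without per-source mapping code.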

ETL/EAI/Data Lakes

Many vendors treat the data silo issue as an application integration problem and provide solutions and tools to extract data from each of these apps, transform it, and load it into a different database or data warehouse for reporting and analytics. Moving data loses detail: fields that seemed irrelevant or unimportant at extraction time, and were left behind, may turn out to be crucial later. In addition, many functional units may have their own technical, cultural, and political reasons for not wanting to share their data.

Data integration is typically an expensive, complex, custom, and time-consuming task. When it succeeds, what you end up with is another database that is queried for analytics and reporting. This new database or data warehouse becomes yet another data silo, accessible only to data experts who usually have neither the context nor the business expertise to translate the data into business value.

This data movement creates another issue: multiple copies of the data exist, one where it was created and is updated, and another in the data warehouse or data lake. It is not uncommon for further copies to be provided to other parts of the business from the data warehouse. Not only are there now multiple copies of the data, but ownership of the master copy is also not well defined, and the security policies that apply to the master are rarely maintained on the copies.

Data Net

Data integration solutions and data warehouse vendors move the data away from its context and its owners. A Data Net keeps the data with the data owners but makes the connections between that data and other datasets within the organization transparent. In addition, external datasets can be acquired from data providers and made available across the enterprise without replication or ambiguity about ownership. This allows the data owner, who understands both the data and the business function, to cross-reference and pull in related data and to perform self-serve analytics on it.

In a Data Net, the data stays with the data owner, who controls its visibility and access. The data owner finds related datasets through discovery. As new datasets become available within the Data Net, the data owner can find new ways to combine the data in a user-driven process.
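The article does not describe how Hawkai DataNet discovers related datasets. One simple, widely used heuristic, assumed here purely for illustration, is to compare the distinct values held by columns in different datasets: a high Jaccard similarity (shared values divided by all values) suggests the columns can be joined. The dataset names, columns, and the 0.5 threshold are hypothetical.

```python
def jaccard(a, b):
    """Jaccard similarity of two sets: |A & B| / |A | B|."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_join_candidates(datasets, threshold=0.5):
    """Return (left_column, right_column, score) for cross-dataset columns whose values overlap."""
    columns = []
    for ds_name, rows in datasets.items():
        for col in rows[0].keys():
            columns.append((ds_name, col, {row[col] for row in rows}))

    candidates = []
    for i in range(len(columns)):
        for j in range(i + 1, len(columns)):
            ds1, c1, v1 = columns[i]
            ds2, c2, v2 = columns[j]
            if ds1 == ds2:
                continue  # only look for links between different datasets
            score = jaccard(v1, v2)
            if score >= threshold:
                candidates.append((f"{ds1}.{c1}", f"{ds2}.{c2}", round(score, 2)))
    # Strongest candidate links first.
    return sorted(candidates, key=lambda c: -c[2])


datasets = {
    "crm": [{"customer_id": "C1", "region": "West"},
            {"customer_id": "C2", "region": "East"},
            {"customer_id": "C3", "region": "West"}],
    "billing": [{"account": "C1", "amount": 120},
                {"account": "C2", "amount": 80},
                {"account": "C4", "amount": 50}],
    "tickets": [{"ticket": "T1", "cust": "C2"},
                {"ticket": "T2", "cust": "C3"}],
}
candidates = find_join_candidates(datasets)
print(candidates)
# [('crm.customer_id', 'tickets.cust', 0.67), ('crm.customer_id', 'billing.account', 0.5)]
```

Note that the links are found from the values alone, even though the columns have different names in each dataset; the pairwise comparison is quadratic in the number of columns, so a production system would index value samples instead.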

Do the following responses sound familiar?

- I don’t have access to the data and the person who can get the data is not available

- I will need at least a week to get that data

- The dataset that you requested is too big

- I don’t have the latest data

- I can’t give you data in that format

And this is after you have deployed ETL, streaming data, and big data tools. If you are an executive or a manager and you hear these data excuses frequently, your data tools are not working for you. The solution is to make data and algorithms truly democratic, self-serve, and user-centric by reimagining your data architecture as an enterprise-wide Data Net.

An Enterprise Data Net is characterized by the following:

- No Schema – The Data Net does not need any prior knowledge of a schema to ingest data.

- Auto Type Detection – The Data Net auto-detects column types for the most common kinds of data.

- Auto-Indexed – The Data Net automatically indexes and stores data efficiently for fast querying and retrievals.

- Data Reliability – Data that is saved in the Data Net is reliable, available and recoverable.

- Conversational Access to Data – Accessing and retrieving data must require no code.

- Data Discovery – The Data Net makes it easy to find similar datasets, discover cross-references across datasets, and join datasets to create aggregated data.

- Data Security – the Data Net provides secure access to data with appropriate security policies to enable collaboration.

- Data Preparation – The Data Net provides tools to cleanse data, de-duplicate, flag outliers, and check the quality and integrity of data.

- Data APIs – The Data Net provides secure access to the stored data via Data APIs. The data APIs allow the data to be retrieved in the format required by the application and at a rate as desired by the application.

- Data Visualizations – Data can be transformed into the most appropriate visualizations using no-code.

- Data Algorithms – The Data Net includes standard algorithms that can be applied to the data, including statistical and transform functions, machine learning algorithms, geospatial functions, and others.

- Third-Party Datasets – The Data Net is pre-loaded with public datasets, including an IP-to-geolocation database, population databases, neighborhood amenity data, regional shapefiles, etc. It is easy to add third-party public datasets to the Data Net and make them available to its users.

- Scale – The Data Net scales data access to the demands of the application.
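The "No Schema" and "Auto Type Detection" properties above can be illustrated with a small sketch. The article does not say how the Data Net infers types; this assumes one common approach: values arrive as strings (here from CSV), and each column gets the narrowest type that every non-empty value parses as. Only ISO dates are recognized, and the sample data is made up.

```python
import csv
import io
from datetime import datetime


def detect_type(values):
    """Return the narrowest type that every non-empty value parses as."""
    vals = [v.strip() for v in values if v and v.strip()]
    if not vals:
        return "string"

    def all_parse(parse):
        try:
            for v in vals:
                parse(v)
            return True
        except ValueError:
            return False

    if all(v.lower() in ("true", "false") for v in vals):
        return "bool"
    if all_parse(int):
        return "int"
    if all_parse(float):
        return "float"
    # Assumption: ISO dates (YYYY-MM-DD) only; real detectors try many formats.
    if all_parse(lambda v: datetime.strptime(v, "%Y-%m-%d")):
        return "date"
    return "string"


def infer_schema(csv_text):
    """Map each CSV column to a detected type without any schema supplied up front."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    return {col: detect_type([row[col] for row in rows]) for col in reader.fieldnames}


sample = """id,pickup_date,fare,cash,zone
1,2023-01-05,12.50,true,Midtown
2,2023-01-05,8,false,
3,2023-01-06,23.75,true,Harlem
"""
print(infer_schema(sample))
# {'id': 'int', 'pickup_date': 'date', 'fare': 'float', 'cash': 'bool', 'zone': 'string'}
```

Empty cells are ignored during detection, so a sparse column still gets a useful type; the detected types would then drive how each column is indexed.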

This enterprise-wide data platform provides data as a service and enables managers and executives at all levels of an enterprise to collaborate and make data-driven decisions that add business value and improve operational efficiency.

Hawkai DataNet

At its simplest, the Data Net is a productivity tool that lets individuals work with data: analyze, transform, and visualize it. With proper data governance, the Data Net becomes an enterprise’s shared data repository, where individuals can discover other data, aggregate it, and engage and collaborate with others across the enterprise. With a shared data repository and the Data Net APIs, the Data Net becomes a platform on which new applications and services can be quickly prototyped, scaled, and operationalized.

Hawkai DataNet implements a data net that makes it easy to ingest, analyze, and transform data using no-code. Once the data is ingested and cleansed, the data APIs are used to create new services and apps using low-code. Hawkai DataNet provides the tools and a DATA (Digitize, Analyze, Transform, Activate) process that an enterprise can use to enable digital transformation and create new business and customer experiences.

Digitize Data

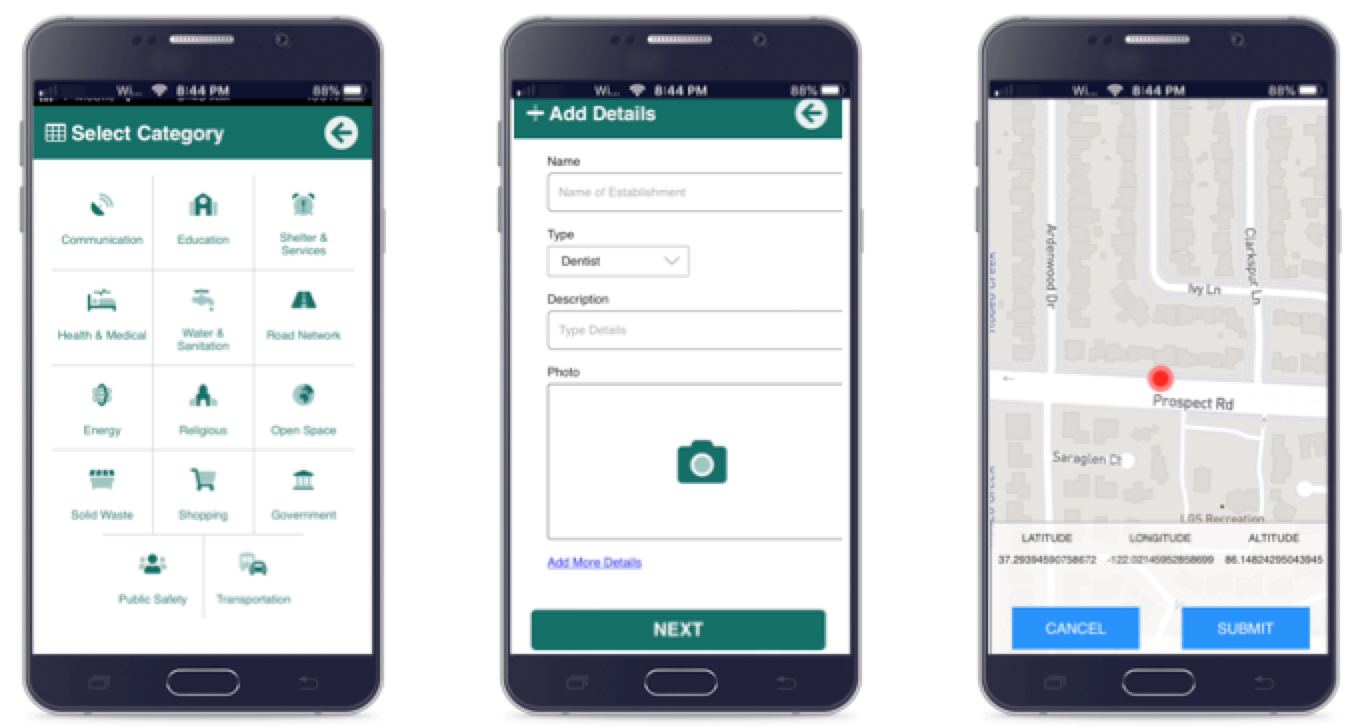

The Digitize phase is about getting data into the Data Net. Hawkai DataNet provides tools to ingest the most common data file formats, including CSV, XLS, JSON, and XML. Data types are auto-detected and indexed appropriately. IoT devices can send data directly to the Data Net using APIs. Hawkai DataNet also provides flexible data collection tools for gathering field data with survey forms and uploading it directly into the platform.
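For the IoT path, a device typically batches readings and POSTs them as JSON. The sketch below only builds the request, so it runs offline. The endpoint URL, bearer-token header, and payload shape are hypothetical placeholders, not Hawkai DataNet's documented API.

```python
import json
import urllib.request
from datetime import datetime, timezone

# Hypothetical ingestion endpoint and token; substitute the platform's real values.
INGEST_URL = "https://datanet.example.com/api/v1/datasets/sensor-readings/rows"
API_TOKEN = "replace-with-your-token"


def build_ingest_request(device_id, readings):
    """Package a batch of sensor readings as a JSON POST request (not yet sent)."""
    payload = {
        "device_id": device_id,
        "sent_at": datetime.now(timezone.utc).isoformat(),
        "rows": readings,  # batching several readings cuts per-request overhead
    }
    return urllib.request.Request(
        INGEST_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_TOKEN}",
        },
        method="POST",
    )


req = build_ingest_request("thermo-17", [{"temp_c": 21.4, "humidity": 0.41}])
# To actually send it: urllib.request.urlopen(req, timeout=10)
```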

Analyze Data

The quality and integrity of data must be established before the data is used in any business process. Poor-quality data leads to misleading analytics and erroneous insights, and can have significant business consequences. Hawkai DataNet provides tools to check data quality, detect outliers, correlate data, and confirm data integrity. The figure below shows no-code quality analysis for an example dataset.
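The article does not list which checks Hawkai DataNet runs. As an illustration only, a basic quality report usually counts missing values and duplicate rows and flags outliers; the sketch below assumes Tukey's rule (values beyond 1.5 times the interquartile range outside the quartiles) for outliers. The taxi-fare rows are made up.

```python
import statistics


def quality_report(rows, numeric_cols):
    """Count missing values and exact duplicate rows; flag outliers with Tukey's 1.5 * IQR rule."""
    report = {"rows": len(rows), "duplicates": 0, "missing": {}, "outliers": {}}

    seen = set()
    for row in rows:
        key = tuple(sorted(row.items()))  # order-independent fingerprint of the row
        if key in seen:
            report["duplicates"] += 1
        seen.add(key)

    for col in rows[0].keys():
        report["missing"][col] = sum(1 for r in rows if r[col] is None or r[col] == "")

    for col in numeric_cols:
        vals = [r[col] for r in rows if isinstance(r[col], (int, float))]
        q1, _, q3 = statistics.quantiles(vals, n=4)  # needs at least 2 values
        iqr = q3 - q1
        low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        report["outliers"][col] = [v for v in vals if v < low or v > high]
    return report


trips = [
    {"trip": 1, "fare": 12.5},
    {"trip": 2, "fare": 9.0},
    {"trip": 3, "fare": 11.0},
    {"trip": 4, "fare": 10.5},
    {"trip": 5, "fare": 250.0},  # suspicious fare
    {"trip": 6, "fare": None},   # missing fare
    {"trip": 4, "fare": 10.5},   # duplicate of trip 4
]
report = quality_report(trips, ["fare"])
print(report)
```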

One way to check data integrity is correlation analysis: fields that ought to be related but show little or no correlation can point to errors in the data. The figure below shows no-code correlation analysis on a dataset using Hawkai DataNet.
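As a sketch of the idea, assuming Pearson's correlation coefficient (the article does not name the measure used): for taxi trips, distance and fare should be strongly correlated, so a sharp drop in the coefficient after a data load hints at an integrity problem, here a single mis-keyed decimal point. The values are made up.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


distance_miles = [1.2, 3.5, 0.8, 5.0, 2.2, 4.1]
fare_usd = [7.5, 14.0, 6.0, 19.5, 10.0, 16.5]
# Same fares, but 19.5 was mis-keyed as 1.95.
fare_bad = [7.5, 14.0, 6.0, 1.95, 10.0, 16.5]

print(round(pearson(distance_miles, fare_usd), 3))  # close to 1.0
print(round(pearson(distance_miles, fare_bad), 3))  # much weaker
```

One bad value out of six is enough to collapse the correlation, which is why it works as a cheap integrity signal.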

Transform Data

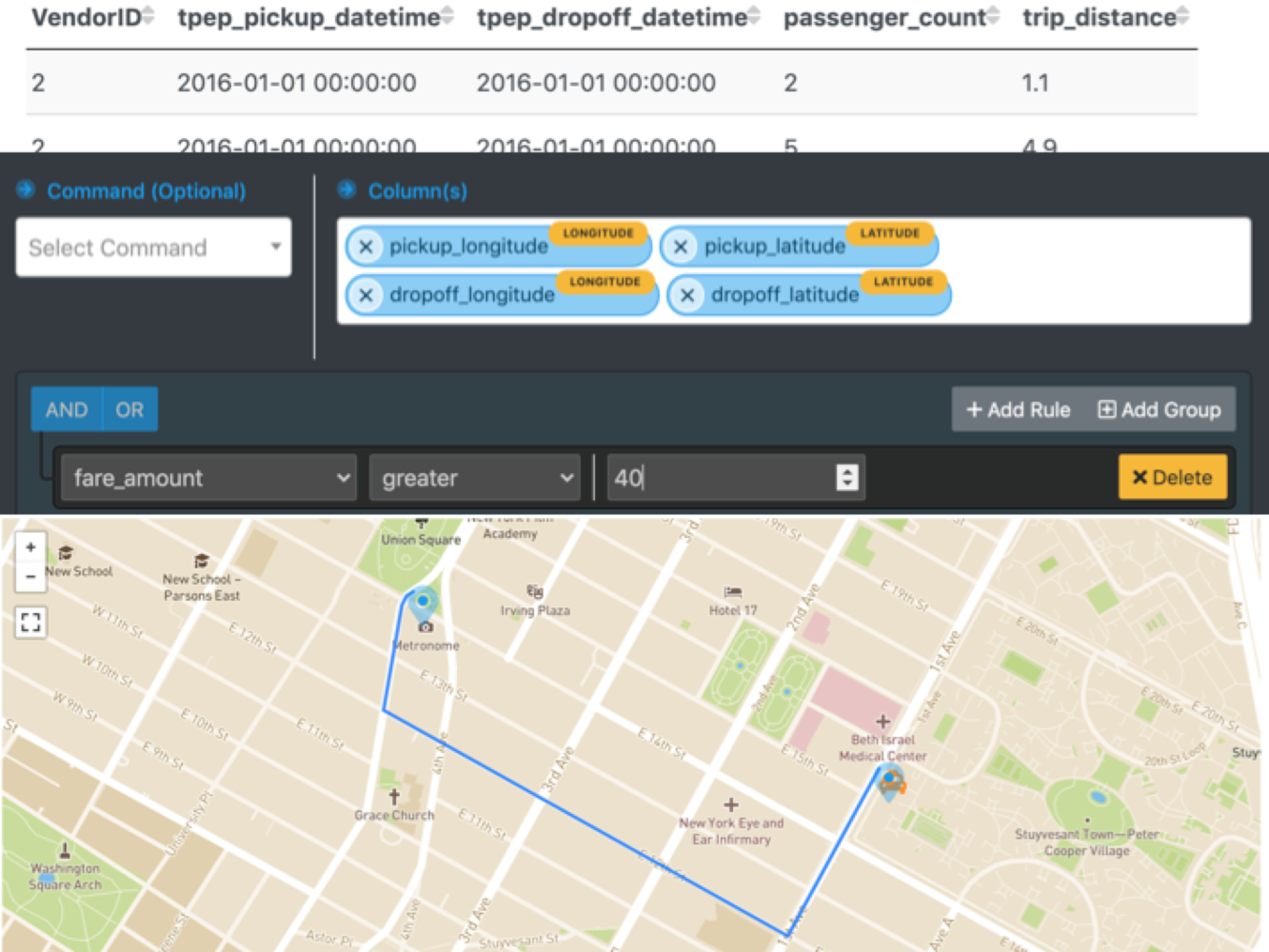

Transforming data allows it to be visualized and better understood. Hawkai DataNet provides business intelligence and location intelligence tools to generate no-code visualizations of the data. The figure below uses a dataset that records all taxicab pickup and drop-off points on a particular day in New York City and shows a no-code transform into a heatmap of the pickup points. The heatmap can be drilled down for insights into the specific intersections where more taxicab pickups happen.
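Behind a point heatmap there is usually a binning step. The article does not describe Hawkai DataNet's method; a common approach, assumed here, is to snap each pickup to a fixed latitude/longitude grid and count points per cell, with the counts driving the color scale. The coordinates are approximate illustrative points, not taken from the dataset in the figure.

```python
import math
from collections import Counter


def grid_cell(lat, lon, cell_deg=0.01):
    """Index of the cell_deg x cell_deg grid cell containing the point."""
    return (math.floor(lat / cell_deg), math.floor(lon / cell_deg))


def pickup_heatmap(points, cell_deg=0.01):
    """Count pickups per grid cell; the counts set each cell's heatmap intensity."""
    return Counter(grid_cell(lat, lon, cell_deg) for lat, lon in points)


pickups = [
    (40.7580, -73.9855),  # around Times Square
    (40.7585, -73.9851),
    (40.7579, -73.9860),
    (40.7484, -73.9857),  # around the Empire State Building
    (40.7061, -74.0087),  # Lower Manhattan
]
heat = pickup_heatmap(pickups)
print(heat.most_common(1))  # [((4075, -7399), 3)]
```

A 0.01-degree cell spans roughly 1.1 km of latitude; drilling down to individual intersections amounts to re-binning the same points with a smaller `cell_deg`.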

In addition, the actual turn-by-turn route taken by the taxicab can be animated without writing a line of code.

The figure below uses a dataset that records campaign impressions aggregated by month and shows how a time-series chart is generated with no-code.
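The monthly dataset behind such a chart is itself an aggregation. Assuming the raw impression records are daily (the article only says the data is aggregated by month), a minimal roll-up could look like the following sketch; the records are made up.

```python
from collections import defaultdict
from datetime import date


def monthly_totals(records):
    """Sum impressions per calendar month, returned in chronological order."""
    totals = defaultdict(int)
    for day, impressions in records:
        totals[(day.year, day.month)] += impressions
    return [(f"{y}-{m:02d}", totals[(y, m)]) for y, m in sorted(totals)]


daily = [
    (date(2023, 1, 3), 1200),
    (date(2023, 1, 17), 800),
    (date(2023, 2, 1), 1500),
    (date(2023, 3, 9), 400),
    (date(2023, 3, 28), 2100),
]
print(monthly_totals(daily))
# [('2023-01', 2000), ('2023-02', 1500), ('2023-03', 2500)]
```

Months with no records are simply absent from the output; a charting layer would need to fill them with zero if gaps should appear on the time axis.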

The figure below uses a dataset that records companies registered by region/state for a particular year and shows how data type auto-detection and pre-loaded third-party region shapefiles are used to generate a no-code interactive visualization.

Activate Data

Successful implementation of the Digitize, Analyze, and Transform phases allows a business to visualize data, gather insights, and drive business actions. The business actions themselves, however, are not automated. The Activate phase is what transforms a data-informed enterprise into a data-driven enterprise. Activation is the process of building out applications and cloud services using the enterprise’s data as a service.

Building a cloud application or service typically requires many competencies: HTML, CSS, and JavaScript on the client side; PHP, Python, Java, or similar expertise on the server side; and SQL or NoSQL expertise on the storage side to store and extract data. Deploying, operationalizing, and scaling the application requires an additional and completely different set of skills and tools. Most of the delays and costs in building cloud-based applications occur in the orchestration between the client-side, server-side, and storage-side components, each of which is typically handled by a different team.

Acknowledging these costs, companies routinely advertise for a full-stack engineer – a person who can seamlessly navigate and bridge issues across client-side, server-side, and storage-side components. It is not just a matter of different programming languages across the components; the programming models themselves are completely different. Client-side programming requires expertise in event-based programming, server-side programming is typically procedural, while storage-side programming is declarative and requires knowledge of data models, data normalization, and indexing. Full-stack engineers are an extremely rare breed and if you know of someone, do let us know in the comments below.

There have also been attempts to use server-side JavaScript technologies and create applications using the MEAN (MongoDB, Express.js, AngularJS, Node.js) stack. The MEAN stack does help a client-side programmer quickly prototype a full-stack capability, but this simplicity must be weighed against the complexity that comes with trying to scale and operationalize the solution.

A Data Net simplifies building data-driven applications by providing uniform, fast access to data through its data APIs. A client-side programmer needs to focus only on the client-side components and workflow, program in one language (JavaScript), and use REST APIs to access data as a service. Using Hawkai DataNet, an enterprise can add value and velocity to its digital transformation with no-code analytics and low-code services.
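Consuming data as a service over REST mostly comes down to paging through results. The sketch below is in Python; a browser client would follow the same pattern with JavaScript's `fetch()`. The endpoint, the `limit`/`offset` parameters, and the `{"rows": [...]}` response shape are hypothetical, not Hawkai DataNet's documented API. An in-memory stand-in replaces the network so the sketch runs offline.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical Data API endpoint and parameters.
BASE_URL = "https://datanet.example.com/api/v1/datasets/campaigns/rows"


def http_get_json(url):
    """Fetch a URL and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def fetch_all_rows(base_url, page_size=100, get_json=http_get_json):
    """Follow offset-based pagination until a short page signals the end."""
    rows, offset = [], 0
    while True:
        query = urllib.parse.urlencode({"limit": page_size, "offset": offset})
        page = get_json(f"{base_url}?{query}")["rows"]
        rows.extend(page)
        if len(page) < page_size:
            return rows
        offset += page_size


# In-memory stand-in for the API so the sketch runs without a network.
_FAKE_TABLE = [{"id": i} for i in range(250)]


def fake_get_json(url):
    params = urllib.parse.parse_qs(urllib.parse.urlparse(url).query)
    limit, offset = int(params["limit"][0]), int(params["offset"][0])
    return {"rows": _FAKE_TABLE[offset:offset + limit]}


rows = fetch_all_rows(BASE_URL, page_size=100, get_json=fake_get_json)
print(len(rows))  # 250
```

Injecting `get_json` keeps the paging logic testable and lets the client swap in retries or authentication without touching it.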

Hawkai Data CXP (Customer eXperience Platform)

An Enterprise Data Net is not just an analytics solution that democratizes data visualization for the enterprise. It is a data virtualization platform that provides APIs to access enterprise and external datasets. The APIs are auto-scaling: the platform automatically scales data access bandwidth according to the access rate. Data-driven applications and services can be built easily, because the app builder has to focus only on the presentation layer. An enterprise’s data architecture will become the key business differentiator that allows it to cost-effectively manage and curate data at scale and drive new customer and business experiences.
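The article does not say how the platform decides when to scale. One widely used rule, assumed here for illustration, is proportional scaling, the same form as the Kubernetes Horizontal Pod Autoscaler formula: desired replicas = ceil(current replicas * current load per replica / target load per replica), clamped to a configured range. The request rates and bounds are hypothetical, and the sketch omits the tolerance band and stabilization windows a real autoscaler adds.

```python
import math


def desired_replicas(current_replicas, current_rps_per_replica, target_rps_per_replica,
                     min_replicas=1, max_replicas=20):
    """Proportional scaling: size the API tier so each replica serves about the target rate."""
    desired = math.ceil(current_replicas * current_rps_per_replica / target_rps_per_replica)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 450, 300))   # busy: scale out to 6
print(desired_replicas(4, 50, 300))    # quiet: scale in to 1
print(desired_replicas(4, 3000, 300))  # spike: capped at 20
```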

The Activate phase of the digital transformation leverages the DataNet platform and uses low-code to create new customer experiences, workflows, and services. How? Learn more about Hawkai Data CXP in our next blog post:

Digital Transformation Accelerated – from Smart Data to Smart Services.